DeepSeek AI Statistics and Facts (2025)

Updated · Jan 29, 2025

Introduction

DeepSeek AI Statistics: DeepSeek AI, founded by Liang Wenfeng in May 2023, has quickly emerged as a significant competitor in the global artificial intelligence market, recognized in particular for its cost-effective, large-scale models. Despite the strong presence of U.S.-based companies like OpenAI, DeepSeek made a notable entry into the international arena in January 2025. The company is funded by High-Flyer, a quantitative hedge fund also established by Liang. This backing allows DeepSeek to focus on long-term projects without pressure from external investors.

The core team at DeepSeek is composed of young and talented graduates from top Chinese universities, providing a fresh perspective and a deep understanding of AI development. The company prioritizes technical skills over traditional experience in its hiring practices, fostering a culture of innovation and efficiency.

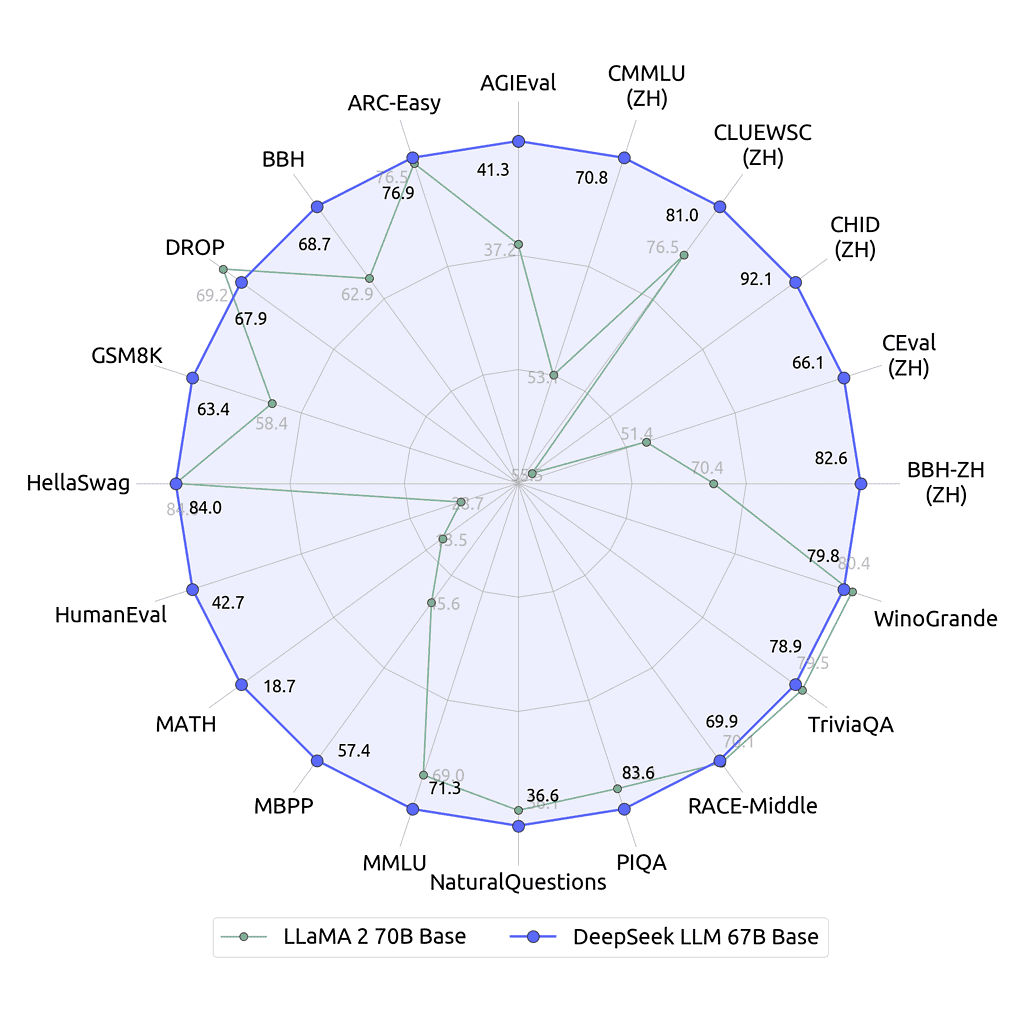

DeepSeek has achieved significant milestones, including the release of the DeepSeek Coder in November 2023, an open-source model designed for coding tasks. Following this, they launched the DeepSeek LLM, which features 67 billion parameters. In May 2024, they unveiled DeepSeek-V2, a model that sparked a price competition in the Chinese AI market due to its affordability and impressive performance. The success of this model led major Chinese tech companies to lower their prices in order to remain competitive.

(Source: github.com/deepseek-ai/DeepSeek-LLM)

The more advanced DeepSeek-Coder-V2 followed, with 236 billion parameters and a context length of up to 128,000 tokens. This model is available via an API, priced at USD 0.14 per million input tokens and USD 0.28 per million output tokens. This pricing reflects the company’s commitment to providing accessible and efficient AI solutions.
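To make the per-token pricing concrete, here is a minimal sketch that estimates the bill for a workload at the DeepSeek-Coder-V2 rates quoted above. The function name and the example token counts are hypothetical and chosen only for illustration.

```python
# Prices quoted in the text above (USD per one million tokens).
INPUT_PRICE_PER_MILLION = 0.14
OUTPUT_PRICE_PER_MILLION = 0.28


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated API cost in USD for one workload."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost


# Hypothetical workload: 5 million input tokens and 1 million output tokens.
cost = estimate_cost_usd(5_000_000, 1_000_000)
print(f"Estimated cost: USD {cost:.2f}")  # prints: Estimated cost: USD 0.98
```

At these rates, even a workload of several million tokens stays under one U.S. dollar.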

Editor’s Choice

- DeepSeek AI was founded in May 2023 in China and is only 20 months old.

- The pricing for DeepSeek models is significantly lower than that of similar OpenAI models: USD 2.19 per million output tokens, roughly 1/27th the price of OpenAI’s o1 model at USD 60.00.

- On January 27, 2025, DeepSeek’s app became number 1 in the Apple App Store, surpassing ChatGPT, which held the number 2 spot.

- As of January 28, 2025, the app has been downloaded 2.6 million times.

- DeepSeek employs approximately 200 people, compared to OpenAI’s workforce of 3,500.

- The latest model from DeepSeek, DeepSeek-V3, has 671 billion parameters.

- The training costs for DeepSeek models are about 1/10th those of comparable Western models.

- Most of DeepSeek’s models are licensed under the MIT license.

- The launch of DeepSeek’s latest AI model in January 2025 drew international attention and triggered a global tech sell-off, putting about USD 1 trillion in market capitalization at risk and causing a 13% pre-market drop in Nvidia stock.

- DeepSeek has published 68 research papers on arXiv.

- In the Chatbot Arena rankings, DeepSeek is ranked 4th with a score of 1357, just behind ChatGPT-4o in 3rd place.

- As of January 27, 2025, DeepSeek has 349,800 followers on X.com.

- DeepSeek’s AI Assistant on the Google Play Store has surpassed 10 million downloads and has achieved a rating of 4.6 stars based on 4,190 reviews.

- DeepSeek has an estimated user base of 5-6 million, according to their user analysis.

- From October 2024 to December 2024, deepseek.com received a total of 18.92 million visits worldwide, marking a significant increase of 163.53% from the previous month, according to SimilarWeb data.

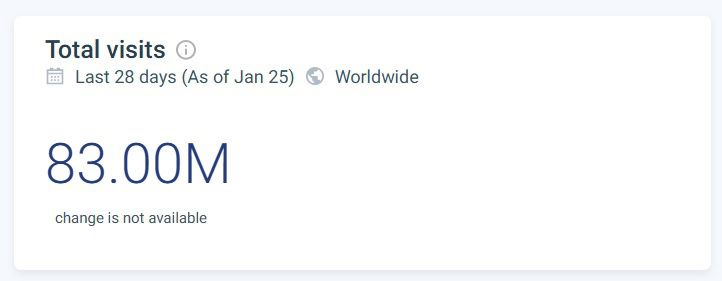

- In the last 28 days, as of January 25, worldwide traffic for DeepSeek reached 83 million visits, with 27% of the traffic coming from China, according to SimilarWeb.
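The output-token price comparison in the list above can be checked with a short calculation. This is a minimal sketch that uses only the two prices quoted there; the variable names are illustrative.

```python
# USD per one million output tokens, as quoted in the list above.
DEEPSEEK_OUTPUT_PRICE = 2.19
OPENAI_O1_OUTPUT_PRICE = 60.00

# How many times more expensive o1 is per output token.
ratio = OPENAI_O1_OUTPUT_PRICE / DEEPSEEK_OUTPUT_PRICE

# The same comparison expressed as a percentage saving.
savings_pct = (1 - DEEPSEEK_OUTPUT_PRICE / OPENAI_O1_OUTPUT_PRICE) * 100

print(f"o1 costs about {ratio:.1f}x as much per output token")
print(f"DeepSeek is about {savings_pct:.2f}% cheaper per output token")
```

The ratio comes out at about 27.4, so the DeepSeek price is a little under 4% of the o1 price.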

Facts About DeepSeek AI

- DeepSeek AI was founded in May 2023 as a spin-off from the High-Flyer hedge fund, which had peak assets of approximately USD 15 billion, providing robust funding for DeepSeek.

- DeepSeek reportedly secured about 10,000 Nvidia A100 GPUs before U.S. export controls tightened, helping it stay technologically competitive.

- The majority of DeepSeek’s researchers are recent graduates, enhancing the company’s ability to innovate rapidly.

- DeepSeek’s research relies heavily on reinforcement learning, notably in its R1-Zero model, which was trained with pure reinforcement learning and emphasizes advanced reasoning in areas such as mathematics and coding.

- The company has implemented a Multi-Head Latent Attention (MLA) system and a Mixture-of-Experts (MoE) configuration, which improve training speed and reduce computational costs.

- DeepSeek-V3 boasts 671 billion parameters, positioning it to compete with top-tier Western large language models (LLMs).

- The estimated training cost for DeepSeek-V3 is USD 5.5 million, significantly lower than typical costs for similar models developed by major tech companies.

- DeepSeek offers distilled model variants, such as “R1-Distill,” which allows users with limited hardware to access advanced AI capabilities.

- Most DeepSeek releases are MIT-licensed, encouraging global development and commercial use of its technology.

- DeepSeek-R1’s API is priced at USD 0.55 per million input tokens, substantially lower than the USD 15 charged by some U.S. competitors.

- DeepSeek’s competitive pricing strategy has compelled major Chinese tech companies like Alibaba, Baidu, and Tencent to reduce their AI model prices.

- DeepSeek places a strong emphasis on fundamental research and ‘moonshot’ projects, akin to the early ambitions of OpenAI.

- The company has developed strategies to counter U.S. export controls, including custom GPU communication and memory optimizations.

- Researchers at DeepSeek often view their contributions as enhancing China’s global standing in AI, combining national pride with scientific pursuit.

- Global media outlets such as Wired and Forbes have highlighted DeepSeek’s technological innovations and efficiency.

- DeepSeek’s focus on scalable, cost-effective architectures in reinforcement learning could significantly influence the future of the global LLM market.
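The Mixture-of-Experts idea mentioned in the list above reduces compute because each token runs through only a few experts instead of the whole network. The following is a generic, minimal sketch of top-k expert routing in plain Python. It is not DeepSeek's router and does not cover Multi-Head Latent Attention; the toy experts, the router scores, and all function names are assumptions made for illustration.

```python
import math


def softmax(scores):
    """Convert raw router scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_scores, top_k=2):
    """Pick the top_k experts for one token and renormalize their weights."""
    probs = softmax(router_scores)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    chosen = ranked[:top_k]
    weight_sum = sum(probs[i] for i in chosen)
    return {i: probs[i] / weight_sum for i in chosen}


def moe_layer(token, experts, router_scores, top_k=2):
    """Run only the selected experts and blend their outputs."""
    weights = route_token(router_scores, top_k)
    return sum(w * experts[i](token) for i, w in weights.items())


# Hypothetical toy setup: 8 "experts" that simply scale their input.
experts = [lambda x, k=k: x * (k + 1) for k in range(8)]
# Hypothetical router scores for a single token.
scores = [0.1, 2.0, -1.0, 0.5, 1.5, 0.0, -0.5, 0.3]

weights = route_token(scores, top_k=2)
output = moe_layer(3.0, experts, scores, top_k=2)
print(f"active experts: {sorted(weights)} of {len(experts)}")
print(f"output: {output:.3f}")
```

In this toy run only 2 of the 8 experts are evaluated for the token, which is where the compute saving comes from: total parameter count can grow while the work done per token stays roughly constant.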

DeepSeek AI Users Statistics

- DeepSeek AI is estimated to have between 5 and 6 million users worldwide, based on a cross-data analysis.

- As of January 28, 2025, DeepSeek AI’s apps have been downloaded at least 2.6 million times, reflecting its number 1 ranking in the Apple App Store in many countries on that date.

- Currently, there is no public data available that provides the exact number of users for DeepSeek AI.

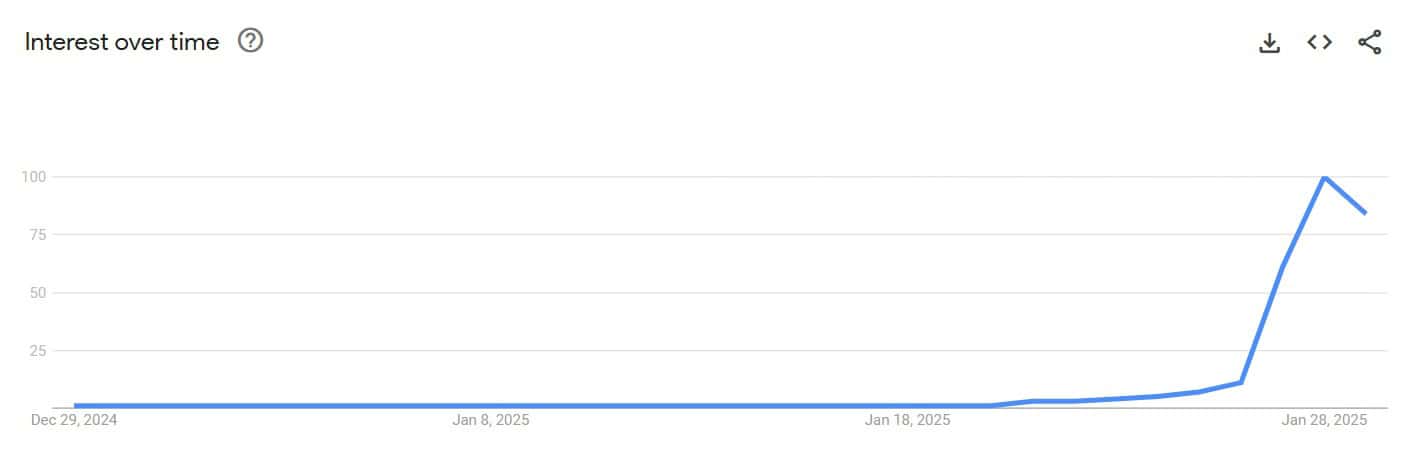

(DeepSeek AI Google Trends)

DeepSeek AI Traffic and Engagement Statistics

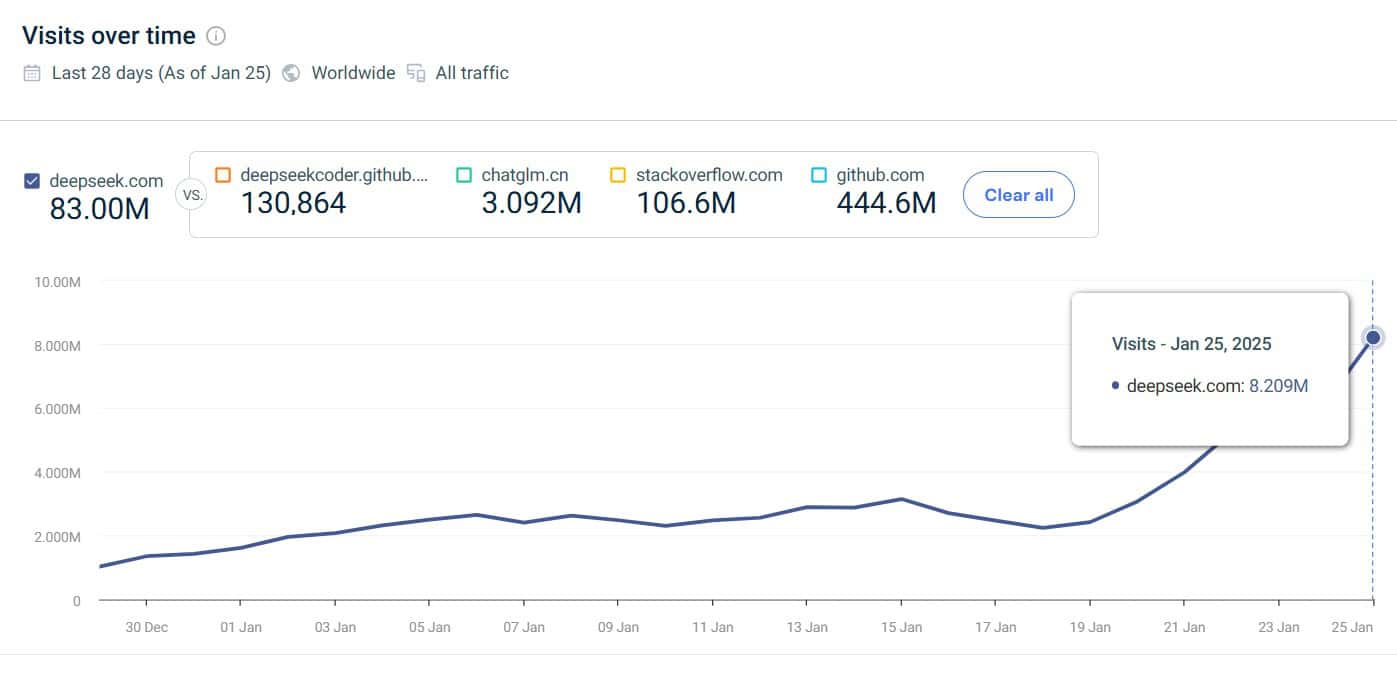

- From October 2024 to December 2024, deepseek.com received a total of 18.92 million visits, marking an increase of 163.53% from the previous month, according to SimilarWeb.

(Source: similarweb.com)

- In the last 28 days as of January 25, worldwide traffic for deepseek.com reached 83 million visits, with 27% of the traffic coming from China.

- The global rank of deepseek.com is #566, while its rank in China is #78.

- Daily unique visitors to the site average 1.707 million.

- The average visit duration on deepseek.com is 6 minutes and 13 seconds.

- The site has an average of 3.87 pages per visit.

- The bounce rate for deepseek.com is 35.94%.

(Source: similarweb.com)

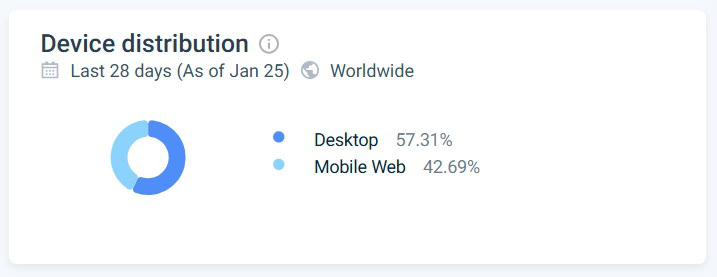

Device Distribution Statistics

- 57.31% of visits to the deepseek.com website are from desktop devices.

- 42.56% of visits come from mobile web devices.

Geographical Traffic Distribution Statistics

- China accounts for 27% of the total traffic, making it the top source of visitors.

- Egypt contributes 10.28% to the site’s traffic, ranking as the second-largest source.

- The United States is the third-largest traffic source, providing 6.58% of the visits.

- Russia follows, generating 4.73% of the website’s traffic.

- India contributes 4.22%, making it the fifth-largest source of traffic to the site.
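The country shares above can be turned into rough visit counts. This sketch assumes the shares apply to the 83 million visits reported for the 28 days ending January 25; the article does not state that the two figures cover the same window, so treat the results as approximations.

```python
# 28-day worldwide visits quoted earlier in the article, in millions.
# Assumption: the country shares below refer to this same period.
TOTAL_VISITS_MILLIONS = 83.0

# Traffic shares in percent, as listed above.
COUNTRY_SHARE_PCT = {
    "China": 27.0,
    "Egypt": 10.28,
    "United States": 6.58,
    "Russia": 4.73,
    "India": 4.22,
}

# Estimated visits per country, in millions.
estimated_visits = {
    country: TOTAL_VISITS_MILLIONS * pct / 100
    for country, pct in COUNTRY_SHARE_PCT.items()
}
top_five_share = sum(COUNTRY_SHARE_PCT.values())

for country, visits in estimated_visits.items():
    print(f"{country}: ~{visits:.2f} million visits")
print(f"Top five combined: {top_five_share:.2f}% of traffic")
print(f"All other countries: {100 - top_five_share:.2f}%")
```

Under that assumption, China alone accounts for roughly 22 million visits, and the five listed countries together make up just over half of all traffic.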

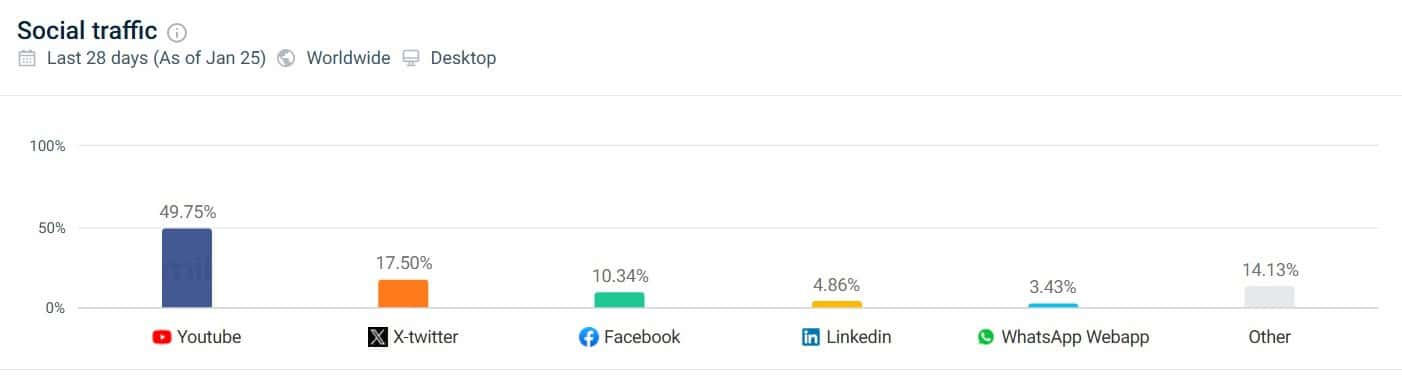

Social Media Traffic Statistics

- YouTube accounts for the largest share of social media traffic at 49.75%.

- X (formerly known as Twitter) follows with 17.50%.

- Facebook contributes 10.34%.

- LinkedIn accounts for 4.86%.

- WhatsApp Webapp accounts for 3.43%.

- Other platforms collectively make up 14.13%.

DeepSeek AI’s Timeline

- May 2023: DeepSeek AI is founded by Liang Wenfeng, evolving from High-Flyer’s Fire-Flyer AI research branch.

- November 2023: DeepSeek releases its first open-source, code-focused model, DeepSeek Coder.

- Late November 2023: The company introduces DeepSeek LLM with 67 billion parameters.

- May 2024: Launch of DeepSeek-V2, which is recognized for its robust performance and cost-effective training and which sets off a pricing competition with major Chinese technology companies.

- June 2024: Release of DeepSeek-Coder-V2, which features 236 billion parameters and a context window of up to 128,000 tokens.

- December 2024: DeepSeek releases DeepSeek-V3 with 671 billion parameters.

- January 2025: DeepSeek debuts DeepSeek-R1, which focuses on advanced reasoning tasks and poses a challenge to OpenAI’s o1 model.

OpenAI vs. DeepSeek AI

Founding Year and Founders

- DeepSeek AI was founded in 2023 by Liang Wenfeng, who initially engaged in AI and algorithm-based trading through the hedge fund High-Flyer, which later expanded into broader AI research.

- OpenAI was established in 2015 by Elon Musk, Sam Altman, and other prominent figures, starting as a non-profit organization dedicated to developing artificial general intelligence (AGI) to benefit all of humanity.

Mission and Development Focus

- DeepSeek emphasizes open-source development, with the aim of making advanced AI technologies accessible to a broader audience. The company was born from a desire to expand the scope of AI applications beyond financial trading.

- OpenAI initially focused on ensuring AGI’s safe and beneficial deployment. It later transitioned to a capped-profit model to secure the funding necessary for advancing its research, which included forming strategic partnerships, such as with Microsoft.

Model Development and Costs

- DeepSeek AI’s DeepSeek-R1 Model: Developed with a focus on efficiency, DeepSeek-R1 builds on the DeepSeek-V3 base model, whose training reportedly required less than USD 6 million in computing resources. The company used innovative training methods and optimized algorithms to achieve high performance at lower costs.

- OpenAI’s GPT-4 Model: In contrast, the development costs for OpenAI’s GPT-4 model are significantly higher, with estimates suggesting expenditures in the range of hundreds of millions of dollars. This reflects the extensive computational resources and data that GPT-4 required for its training.

Mathematical Reasoning

- DeepSeek-R1 excels in mathematical reasoning and coding tasks, particularly notable in the American Invitational Mathematics Examination (AIME) 2024 benchmark.

- DeepSeek-R1 achieved a 79.8% accuracy in the AIME 2024 benchmark.

- OpenAI’s o1 model scored slightly lower, with 79.2% accuracy on the same benchmark.

Versatility and General-Purpose Capabilities

- OpenAI’s models are known for their versatility and broad applicability across various tasks.

- They perform exceptionally well in diverse domains like natural language understanding, translation, and creative writing.

- In the Massive Multitask Language Understanding (MMLU) benchmark, OpenAI’s models outperform DeepSeek-R1, demonstrating a broader knowledge base and higher proficiency in multiple subjects.

Open-Source vs. Proprietary Models

- DeepSeek AI adopts an open-source approach, fully releasing its DeepSeek-R1 model to the public. This allows developers globally to freely access, modify, and implement the model, fostering collaboration and speeding up innovation within the AI community.

- OpenAI initially embraced open research but has shifted towards a more proprietary model in recent years. The organization offers access to models like GPT-4 through commercial partnerships and paid APIs, allowing it to maintain control over how its models are deployed and used. This strategy is intended to ensure that safety and ethical standards are met in the application of its technology.

Market Impact and Reception

- Market Impact of DeepSeek-R1: The release of DeepSeek-R1 has notably influenced the tech industry due to its cost-effective development and open-source nature, challenging existing market paradigms.

- Reactions in Tech Stocks: The introduction of DeepSeek AI’s models led to a downturn in major U.S. tech stocks, including significant declines for companies like Nvidia, as investors reconsidered the competitive landscape in AI technology.

- OpenAI’s Industry Dominance: OpenAI remains a leading entity in the AI sector, with its models being widely adopted across various industries.

- OpenAI’s Partnerships: Notably, OpenAI’s collaboration with Microsoft integrates its technology into the Azure AI platform, broadening its reach and influence in the tech industry.

- DeepSeek’s Focus: DeepSeek AI prioritizes the development of cost-effective, open-source AI models, specializing in tasks that require advanced reasoning and coding abilities.

- OpenAI’s Approach: In contrast, OpenAI focuses on creating versatile, high-performance models that are broadly applicable across many sectors, employing a more proprietary model.

DeepSeek vs OpenAI Comparison Table

(Source: play.ht)

Pramod Pawar brings over a decade of SEO expertise to his role as the co-founder of 11Press and Prudour Market Research firm. A B.E. IT graduate from Shivaji University, Pramod has honed his skills in analyzing and writing about statistics pertinent to technology and science. His deep understanding of digital strategies enhances the impactful insights he provides through his work. Outside of his professional endeavors, Pramod enjoys playing cricket and delving into books across various genres, enriching his knowledge and staying inspired. His diverse experiences and interests fuel his innovative approach to statistical research and content creation.